Your Viewers Are Scrolling Past Great Content

Vionlabs’ emotion-aware AI understands mood, pacing, and storytelling, so your platform can surface content that truly resonates with every viewer.


Trusted by leading streaming platforms and media companies:

Without Vionlabs

Your catalog is full of great stories. But viewers can’t find the right one.

Generic metadata that treats every title the same

Static thumbnails that don’t reflect the mood or story

Manual editorial curation that can’t scale

Recommendations based on genre alone – viewers scroll and leave

Ad placements that interrupt the story and frustrate viewers

With Vionlabs

We make your entire content library discoverable, engaging, and revenue-ready

Emotion-aware AI that understands mood, pacing, characters, and narrative arc

Mood-matched thumbnails & preview clips generated for every title automatically

AI-curated editorial lists with 90%+ catalog coverage – continuously updated

Story-driven discovery that surfaces content matching how viewers want to feel

Non-intrusive ad breaks at natural story moments, with contextual targeting

300M+ Happy Viewers

64% Higher Content Discovery

99+ Languages Supported

20+ Media Companies

“The ability to better serve our audiences based on understanding key content characteristics, like mood, will change the way we deliver content to our subscribers. Given Vionlabs’ industry-first technology, we’ve signed up to work with this platform for years to come.”

Dr. Jörg Richartz

Head of TV Strategy

What can you do with Vionlabs?

Three Labs. One Platform. From raw content to viewer experience.

Editorial Lab

Creative Lab

Operations Lab

Editorial Lab

Turn Your Catalog Into a Discovery Engine

Our AI analyzes the emotional DNA of every title – mood, pacing, intensity, character dynamics – and transforms it into structured, actionable metadata. Build mood-based editorial lists that cover 90%+ of your catalog automatically.

Emotion, mood, genre & theme tagging at scale

AI-curated Smartlists with 90%+ catalog coverage

Collaborative editorial workflows

Multilingual long- and short-form synopsis
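To make the Smartlist idea concrete, here is a minimal Python sketch, assuming each title already carries a set of mood tags. The catalog, the tag names, and the helpers `build_mood_lists` and `catalog_coverage` are hypothetical and do not reflect Vionlabs' actual data model or API. The sketch groups titles into one list per mood and measures coverage as the share of titles that appear in at least one list.

```python
from collections import defaultdict

# Hypothetical per-title mood tags, standing in for AI-generated metadata.
catalog = {
    "title_a": {"uplifting", "romantic"},
    "title_b": {"tense", "dark"},
    "title_c": {"uplifting", "funny"},
    "title_d": {"melancholic"},
    "title_e": set(),  # untagged title: not reachable through any list
}

def build_mood_lists(catalog):
    """Group titles into one list per mood tag (a simple stand-in for Smartlists)."""
    lists = defaultdict(list)
    for title, moods in sorted(catalog.items()):
        for mood in sorted(moods):
            lists[mood].append(title)
    return dict(lists)

def catalog_coverage(catalog, lists):
    """Fraction of catalog titles that appear in at least one list."""
    covered = {title for titles in lists.values() for title in titles}
    return len(covered) / len(catalog) if catalog else 0.0

lists = build_mood_lists(catalog)
print(lists["uplifting"])                # ['title_a', 'title_c']
print(catalog_coverage(catalog, lists))  # 0.8
```

In this toy model, untagged titles such as `title_e` are what pull coverage down, so coverage depends directly on how much of the catalog receives tags.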

Learn more about Editorial Lab

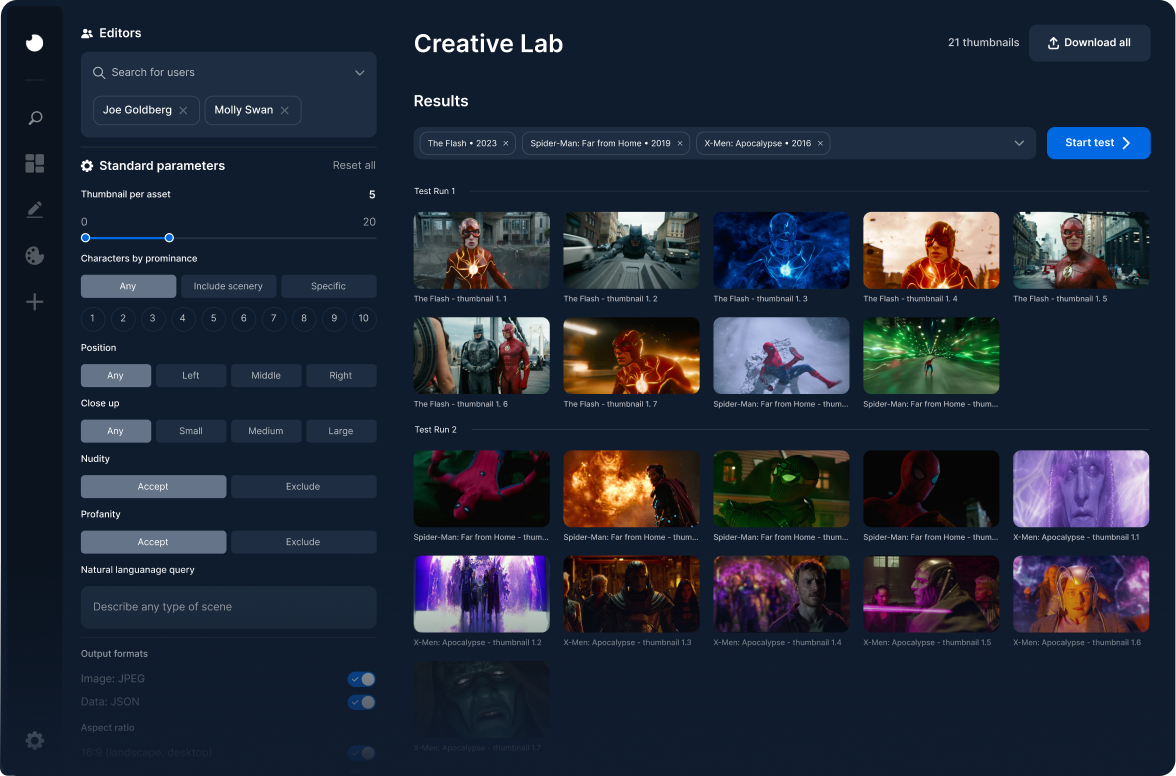

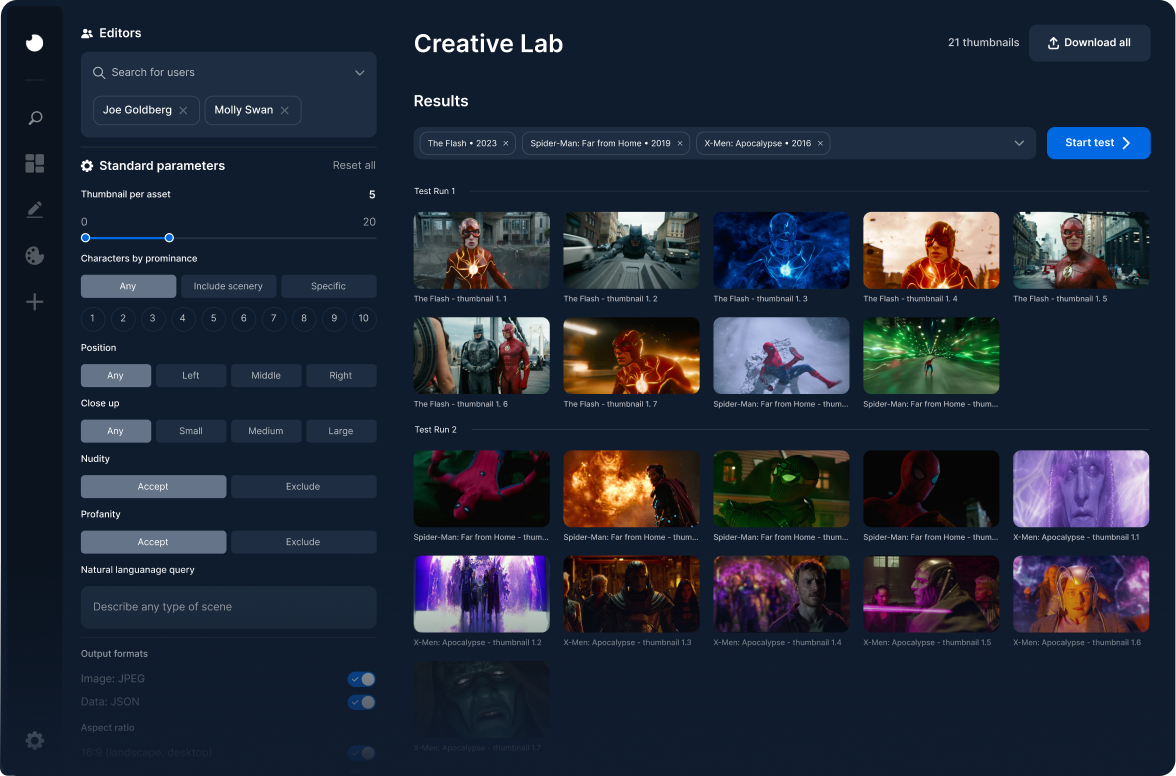

Creative Lab

Visuals That Make Viewers Click

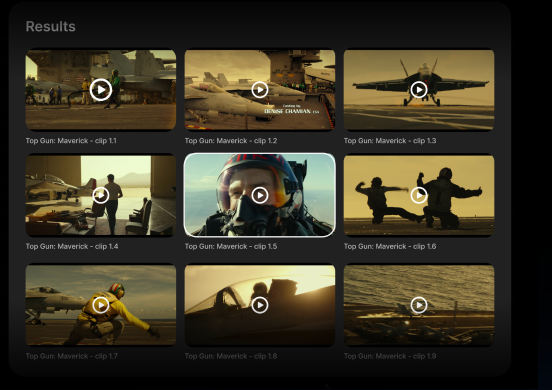

Generate mood-matched thumbnails, preview clips, and shorts. Define creative recipes by mood, character, or key moments, then apply them across your entire library in any format from 16:9 to 9:16.

Recipe-based thumbnail & clip generation

Character & celebrity tracking + intelligent reframing

Multi-format: web, mobile, social

Collaborative review, correction & approval

Learn more about Creative Lab

Operations Lab

Automate What Slows You Down

Detect intros, recaps, and credits with 98% accuracy. Find natural ad-break moments and serve contextual ads using scene-level tags. All at scale, with zero manual effort.

Intro/recap/credit detection – 98% accuracy

Story-aware and brand-safe ad-break inventory creation

Contextual targeting (IAB, mood, keywords)

46% churn reduction with AI-optimized ad breaks
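Finding ad-break moments can be illustrated with a simple greedy heuristic. The sketch below is a hypothetical example, not Vionlabs' method: it assumes scene boundaries already come with a tension score and a brand-safety flag, and it picks low-tension, brand-safe boundaries that are at least a minimum gap apart. The `Boundary` class, `pick_ad_breaks`, and the default thresholds are illustrative assumptions.

```python
from dataclasses import dataclass

@dataclass
class Boundary:
    time_s: float     # timestamp of a scene boundary, in seconds
    tension: float    # hypothetical 0-1 tension score around the boundary
    brand_safe: bool  # hypothetical flag from compliance/topic detection

def pick_ad_breaks(boundaries, max_tension=0.4, min_gap_s=600.0):
    """Greedily pick low-tension, brand-safe boundaries spaced at least min_gap_s apart."""
    breaks = []
    last = 0.0  # programme start
    for b in sorted(boundaries, key=lambda b: b.time_s):
        # Skip boundaries that would interrupt a tense moment or are not brand-safe.
        if not b.brand_safe or b.tension > max_tension:
            continue
        # Enforce a minimum spacing from the start or the previous break.
        if b.time_s - last >= min_gap_s:
            breaks.append(b.time_s)
            last = b.time_s
    return breaks

boundaries = [
    Boundary(300, 0.2, True),    # too early: within 10 minutes of the start
    Boundary(720, 0.9, True),    # cliffhanger: tension too high
    Boundary(780, 0.3, True),    # selected
    Boundary(1200, 0.1, False),  # low tension but flagged as not brand-safe
    Boundary(1500, 0.35, True),  # selected
]
print(pick_ad_breaks(boundaries))  # [780, 1500]
```

A greedy pass keeps the sketch short; a real scheduler might instead optimise break placement across the whole programme.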

Learn more about Operations Lab

How Vionlabs Transforms Your Content

Domain-specific AI built for entertainment. Not a generic wrapper.

Our models are trained on entertainment content and understand stories, characters, emotions, and structure – just like a human viewer.

1

Ingest

On-Prem, Secure, Multimodal

We deploy on-prem within your infrastructure – no assets ever leave your environment. Our AI securely ingests your video, audio, and text sources. We analyze the picture and soundtrack, and transcribe spoken dialogue in 99+ languages – all aligned on a single timeline so the AI experiences your content like your viewers do.

Deployed on-prem – your content never leaves your infrastructure

Analyzes video, audio, and dialogue simultaneously

Transcribes and aligns 99+ languages on a single timeline

Processes content the way a human viewer experiences it
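Aligning dialogue with the picture on a single timeline can be sketched as interval matching. Assuming shot boundaries and transcript segments are both given as start and end times in seconds (a hypothetical input format), each spoken line is attached to the shot it overlaps most. `overlap` and `align_dialogue` are illustrative names, not part of any Vionlabs API.

```python
def overlap(a_start, a_end, b_start, b_end):
    """Length of the overlap between two time intervals (0 if they are disjoint)."""
    return max(0.0, min(a_end, b_end) - max(a_start, b_start))

def align_dialogue(shots, segments):
    """Attach each transcript segment to the shot it overlaps most."""
    if not shots:
        return {}
    aligned = {i: [] for i in range(len(shots))}
    for seg_start, seg_end, text in segments:
        best = max(range(len(shots)),
                   key=lambda i: overlap(seg_start, seg_end, *shots[i]))
        # Drop segments that fall outside every shot.
        if overlap(seg_start, seg_end, *shots[best]) > 0:
            aligned[best].append(text)
    return aligned

shots = [(0.0, 4.0), (4.0, 9.5), (9.5, 15.0)]
segments = [
    (0.5, 3.0, "Where were you last night?"),
    (3.5, 6.0, "Out."),  # spans a cut: 2.0 s in shot 1 vs 0.5 s in shot 0
    (10.0, 12.0, "That's not an answer."),
]
print(align_dialogue(shots, segments))
```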

2

Analyze

Content DNA Extraction

Vionlabs builds a multi-dimensional map of every moment in your content by extracting story signals across three core layers:

Video

Scenes, objects, characters, colors, style, composition, visual aesthetics

Audio

Music, speech, sound effects, tension, emotional tone, pacing cues

Text

Dialogue, keywords, context, narrative cues, 99+ languages

These signals are fused into specialized embeddings – compact, multimodal vectors that encode the “essence” of scenes, episodes, or entire catalogs. This allows similarity search, clustering, and comparison across different levels of granularity.
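Similarity search over embeddings can be sketched in a few lines, assuming each scene is already represented by a vector: rank items by cosine similarity to a query. The three-dimensional toy vectors and the `most_similar` helper are hypothetical. Real multimodal embeddings have many more dimensions, and large catalogs would typically use an approximate nearest-neighbor index rather than this brute-force scan.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def most_similar(query, index, k=2):
    """Return the k item ids whose embeddings are closest to the query (brute force)."""
    scored = sorted(index.items(), key=lambda kv: cosine(query, kv[1]), reverse=True)
    return [item_id for item_id, _ in scored[:k]]

# Toy 3-dimensional embeddings for illustration only.
index = {
    "scene_chase": [0.9, 0.1, 0.0],
    "scene_standoff": [0.8, 0.3, 0.1],
    "scene_wedding": [0.0, 0.2, 0.9],
}
print(most_similar([1.0, 0.2, 0.0], index))  # ['scene_chase', 'scene_standoff']
```

The same scoring works at any granularity (scene, episode, title) as long as all items share one embedding space.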

3

Understand

Story-Aware AI Models

While most AI models are built for general tasks, Vionlabs trains its models specifically on stories and content from media and entertainment – building domain-specific AI with a clear purpose. Our models interpret content with cultural, emotional, and cinematic understanding through an editorial lens:

Emotional Arc Prediction

Maps the emotional journey of a scene, episode, or series

Narrative Segmentation

Identifies story structure, act breaks, and turning points

Character Modeling & Relationships

Tracks characters and understands their dynamics

Genre, Theme & Mood Classification

Goes beyond genre tags to mood, themes, and editorial signals

Scene Pacing Analysis

Measures tension, rhythm, and intensity at the scene level

Compliance & Topic Detection

Flags sensitive content and maps IAB topics for ad safety

This specialization allows Vionlabs AI to deliver metadata and insights that feel human-level and production-ready.
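Scene pacing can be approximated, very roughly, by how often the picture cuts. The sketch below assumes shot start times (in seconds) and scene boundaries are already known, and uses cuts per minute as a single proxy signal. The thresholds and the `cuts_per_minute` and `label_pacing` helpers are hypothetical; the models described above combine video, audio, and dialogue signals rather than relying on shot timing alone.

```python
def cuts_per_minute(shot_starts, scene_start, scene_end):
    """Shot cuts strictly inside a scene, normalised by scene length in minutes."""
    duration_min = (scene_end - scene_start) / 60.0
    if duration_min <= 0:
        return 0.0
    # A shot starting exactly at scene_start is the scene's opening shot, not a cut within it.
    cuts = sum(1 for t in shot_starts if scene_start < t < scene_end)
    return cuts / duration_min

def label_pacing(rate, fast=15.0, slow=5.0):
    """Map a cut rate to a coarse pacing label (hypothetical thresholds)."""
    if rate >= fast:
        return "fast"
    if rate <= slow:
        return "slow"
    return "medium"

shot_starts = [0, 2, 4, 6, 8, 10, 12, 14, 16, 18, 20, 30, 60, 90]
scenes = {"chase": (0, 30), "dinner": (30, 120)}
pacing = {name: label_pacing(cuts_per_minute(shot_starts, s, e))
          for name, (s, e) in scenes.items()}
print(pacing)  # {'chase': 'fast', 'dinner': 'slow'}
```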

4

Apply

Structured Metadata via API

All structured intelligence is stored as metadata, accessible through APIs, and designed to integrate directly into your broadcast and streaming workflows. It powers the entire Vionlabs product suite.

Explore Our AI in Depth →

Integrate Vionlabs across your ecosystem

Connect Vionlabs seamlessly with your existing platforms and media workflows to activate our data wherever you operate.

On-Prem Deployment

REST API

Cloud & Hybrid

Award-Winning AI for Media & Entertainment: